With CloudBees leaving the free online Jenkins scene, I couldn't Google up any obvious successors; everyone seems to want cash for builds-as-a-service. It was looking increasingly likely that I would have to press some of my own hardware into service as a Jenkins host. And then I had an idea. As it turns out, one of the cloudy providers I had previously dismissed, OpenShift, is exactly what is needed here!

The OpenShift Free Tier gives you three Small "gears" (OpenShift-speak for "machine instance"), and there's even a "cartridge" (OpenShift-speak for "template") for a Jenkins master!

There are quite a few resources to help with setting up a Jenkins master on OpenShift, so I won't repeat them here, but it was really very easy, and so far I haven't had to tweak the configuration of that box/machine/gear/cartridge/whatever at all. Awesome stuff. The only catch is that setting up at least one build slave is compulsory: the master won't build anything for you. Again, there are some good pages to help with this, and it's not much different from setting up a build slave on your own physical hardware (sharing SSH keys, etc.).

The next bit was slightly trickier: installing SBT onto an OpenShift Jenkins build slave.

This blog post gave me 95 percent of the solution, which I then tweaked to fetch SBT 0.13.6 from the official source. It also introduced me to OpenShift's Git-driven configuration system, which is super-cool and, unlike tools such as Puppet, properly immutable. The following goes in `.openshift/action_hooks/start` in the Git repository for your build slave. Once you `git push`, the box gets stopped, wiped, and restarted with the new start script. If you introduce an error in your push, it gets rejected. Bliss.

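```bash
#!/bin/bash
# Install SBT into OpenShift's persistent data directory, but only on first start.
cd "$OPENSHIFT_DATA_DIR" || exit 1

if [[ -d sbt ]]; then
  echo "SBT installed"
else
  SBT_VERSION=0.13.6
  SBT_URL="https://dl.bintray.com/sbt/native-packages/sbt/${SBT_VERSION}/sbt-${SBT_VERSION}.tgz"
  echo "Fetching SBT ${SBT_VERSION} from ${SBT_URL}"
  echo "Installing SBT ${SBT_VERSION} to ${OPENSHIFT_DATA_DIR}"
  # -L follows redirects; -f fails on HTTP errors instead of saving an error page
  curl -fL "$SBT_URL" -o sbt.tgz || exit 1
  # Extract only the top-level sbt/ directory from the archive
  tar zxvf sbt.tgz sbt
  rm sbt.tgz
fi
```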

The next hurdle was stopping SBT from dying because it can't write to `$HOME` on an OpenShift node; the fix was to set `-Duser.home=${OPENSHIFT_DATA_DIR}` when invoking SBT. (`OPENSHIFT_DATA_DIR` is the de facto writeable location for persistent storage in OpenShift; you'll see it mentioned a few more times in this post.)
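If the same job script also has to run on slaves outside OpenShift, where `OPENSHIFT_DATA_DIR` is unset, the `user.home` choice can be made at run time instead of being hard-coded. The helper below is a hypothetical sketch (the name `pick_user_home` is mine, not part of SBT or OpenShift): it prints the first candidate directory that is non-empty, exists and is writable.

```bash
#!/bin/bash
# Hypothetical helper: print the first candidate directory that exists and is
# writable, for use as SBT's -Duser.home=... value.
# Empty candidates (e.g. an unset OPENSHIFT_DATA_DIR) are skipped.
# Returns non-zero, printing nothing, if no candidate is usable.
pick_user_home() {
  local dir
  for dir in "$@"; do
    if [[ -n "$dir" && -d "$dir" && -w "$dir" ]]; then
      printf '%s\n' "$dir"
      return 0
    fi
  done
  return 1
}
```

A job could then use `export SBT_OPTS="-Duser.home=$(pick_user_home "$OPENSHIFT_DATA_DIR" "$HOME")"`, preferring the OpenShift data directory and only falling back to `$HOME` off-gear. If neither is usable the value comes out empty, so a real job should check the return code first.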

But an OpenShift "Small gear" build slave is slow and severely RAM-restricted, so much so that at first I was getting heaps of these during my builds:

```
...
Compiling 11 Scala sources to /var/lib/openshift//app-root/data/workspace//target/scala-2.11/test-classes...
FATAL: hudson.remoting.RequestAbortedException: java.io.IOException: Unexpected termination of the channel
hudson.remoting.RequestAbortedException: hudson.remoting.RequestAbortedException: java.io.IOException: Unexpected termination of the channel
    at hudson.remoting.RequestAbortedException.wrapForRethrow(RequestAbortedException.java:41)
    at hudson.remoting.RequestAbortedException.wrapForRethrow(RequestAbortedException.java:34)
    at hudson.remoting.Request.call(Request.java:174)
    at hudson.remoting.Channel.call(Channel.java:742)
    at hudson.remoting.RemoteInvocationHandler.invoke(RemoteInvocationHandler.java:168)
    at com.sun.proxy.$Proxy45.join(Unknown Source)
    at hudson.Launcher$RemoteLauncher$ProcImpl.join(Launcher.java:956)
    at hudson.tasks.CommandInterpreter.join(CommandInterpreter.java:137)
    at hudson.tasks.CommandInterpreter.perform(CommandInterpreter.java:97)
    at hudson.tasks.CommandInterpreter.perform(CommandInterpreter.java:66)
    at hudson.tasks.BuildStepMonitor$1.perform(BuildStepMonitor.java:20)
    at hudson.model.AbstractBuild$AbstractBuildExecution.perform(AbstractBuild.java:756)
    at hudson.model.Build$BuildExecution.build(Build.java:198)
    at hudson.model.Build$BuildExecution.doRun(Build.java:159)
    at hudson.model.AbstractBuild$AbstractBuildExecution.run(AbstractBuild.java:529)
    at hudson.model.Run.execute(Run.java:1706)
    at hudson.model.FreeStyleBuild.run(FreeStyleBuild.java:43)
    at hudson.model.ResourceController.execute(ResourceController.java:88)
    at hudson.model.Executor.run(Executor.java:232)
...
```

This is actually Jenkins losing contact with the build slave, because the slave has exceeded the 512MB memory limit and been forcibly terminated. The fact that it did this while compiling Scala, specifically while compiling Specs2 tests, reminds me of an interesting investigation into compile times which pointed out how Specs2's trait-heavy style blows compilation times (and, I suspect, resources) out horrendously compared to other frameworks. But that is for another day!

If you are experiencing these errors on OpenShift, you can confirm that they are caused by a "memory limit violation" by reading a special counter that increments whenever a violation occurs. Note that this count never resets, even if the gear is restarted, so you just need to watch for changes.
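Because only changes in the counter matter, one way to use it is to snapshot it before a build and compare afterwards. This is a minimal sketch under an assumption: that the counter is the cgroup value `memory.failcnt` and can be read on the gear with `oo-cgroup-read memory.failcnt`. If your gear exposes it differently, override the hypothetical `FAILCNT_CMD` variable. The function names `read_failcnt` and `report_violations` are illustrative only.

```bash
#!/bin/bash
# ASSUMPTION: this command prints the gear's memory-limit violation counter.
# Override FAILCNT_CMD if your gear exposes the counter differently.
FAILCNT_CMD=${FAILCNT_CMD:-"oo-cgroup-read memory.failcnt"}

read_failcnt() {
  # Unquoted on purpose: the variable holds a command plus its argument.
  $FAILCNT_CMD
}

# Compare two readings. The counter never resets, so only the
# difference between snapshots says anything about this build.
# Returns 1 if new violations occurred, 0 otherwise.
report_violations() {
  local before=$1 after=$2
  local delta=$(( after - before ))
  if (( delta > 0 )); then
    echo "memory limit hit ${delta} time(s)"
    return 1
  fi
  echo "no new memory limit violations"
}
```

In a job you would capture `before=$(read_failcnt)` before invoking SBT and `after=$(read_failcnt)` once it finishes, then call `report_violations "$before" "$after"` to see whether the gear hit its limit during that build.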

A temporary fix for these issues seemed to be running `sbt test` rather than `sbt clean test`; evidently this used just slightly less heap space and got away with it. But I felt very nervous about the fragility not just of this "solution" but also of the resulting artifact: if I'm going to the trouble of using a CI tool to publish these things, it seems a bit stupid not to build from a clean foundation.

So after a lot of trawling around and trying things, I found a two-fold solution to keeping an OpenShift Jenkins build slave beneath the fatal 512MB threshold.

Firstly, remember while a build slave is executing a job there are actually

two Java processes running - the "slave communication channel" (for want of a better phrase) and the job itself. The JVM for the slave channel can safely be tuned to consume

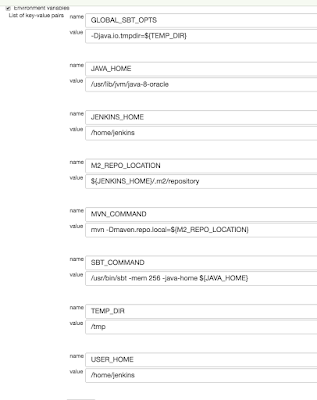

very few resources, leaving more for the "main job". So, in the Jenkins node configuration for the build slave, under the "Advanced..." button, set the "JVM Options" to:

```
-Duser.home=${OPENSHIFT_DATA_DIR} -XX:MaxPermSize=1M -Xmx2M -Xss128k
```

Secondly, set some more JVM options for SBT to use. For SBT > 0.12.0 this is most easily done by providing a `-mem` argument, which forces sensible values for `-Xms`, `-Xmx` and `-XX:MaxPermSize`. Also, because the total memory used by the JVM can be approximated fairly well by

`Max memory ≈ [-Xmx] + [-XX:MaxPermSize] + number_of_threads * [-Xss]`

it becomes apparent that it is very important to clamp down the stack size (`-Xss`), as a Scala build/test cycle can spin up a lot of threads. So each of my OpenShift Jenkins jobs now does this in an "Execute Shell" build step:

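```bash
# Point SBT's home at the writeable data dir; pass the Jenkins build number through as build.version
export SBT_OPTS="-Duser.home=${OPENSHIFT_DATA_DIR} -Dbuild.version=$BUILD_NUMBER"
# Keep per-thread stacks small, since a build/test cycle can start many threads
export JAVA_OPTS="-Xss128k"
# the -mem option will set -Xmx and -Xms to this number and PermGen to 2* this number
# (SBT lives in $OPENSHIFT_DATA_DIR/sbt, two levels above the job's workspace)
../../sbt/bin/sbt -mem 128 clean test
```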

This combination seems to work quite nicely in the 512MB OpenShift Small gear.
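To see why clamping `-Xss` matters so much, the rule of thumb `Xmx + MaxPermSize + threads * Xss` can be turned into a quick calculation. This is a sketch: `jvm_max_mem_mb` is a hypothetical helper, the 200-thread figure is an assumed example rather than a measurement, and the 1MB comparison assumes the usual HotSpot default thread stack size on 64-bit Linux. PermGen is taken as 256MB, i.e. twice the `-mem 128` value, following the comment in the job script above.

```bash
#!/bin/bash
# Rule-of-thumb estimate of a JVM's worst-case footprint:
#   max ~= Xmx + MaxPermSize + threads * Xss
# Heap and PermGen are given in MB, the per-thread stack size in KB.
# Prints whole MB; bash integer division rounds down.
jvm_max_mem_mb() {
  local xmx_mb=$1 perm_mb=$2 threads=$3 xss_kb=$4
  local total_kb=$(( (xmx_mb + perm_mb) * 1024 + threads * xss_kb ))
  echo $(( total_kb / 1024 ))
}
```

With those inputs, `jvm_max_mem_mb 128 256 200 128` gives 409, comfortably under the 512MB gear limit, whereas `jvm_max_mem_mb 128 256 200 1024` gives 584, which already exceeds the limit on paper before the slave channel's JVM is even counted. The rule of thumb leaves out other native memory the JVM uses, so the real worst case is somewhat higher than either figure.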