TL;DR: If you need a Heroku Jenkins Plugin that doesn't barf when you Set Configuration, here you go.

CI Indistinguishable From Magic

I'm extremely happy with my OpenShift-based Jenkins CI setup that deploys to Heroku. It really does do the business, and the price simply cannot be beaten.

Know Thy Release

Too many times, at too many workplaces, I have faced the problem of trying to determine "Is this the latest code?" from "the front end". Determined not to have this problem in my own apps, I've been employing a couple of tricks for a few years now that give excellent traceability.

Firstly, I use the nifty sbt-buildinfo plugin that allows build-time values to be injected into source code. A perfect match for Jenkins builds, it creates a Scala object that can then be accessed as if it contained hard-coded values. Here's what I put in my build.sbt:

buildInfoSettings

sourceGenerators in Compile <+= buildInfo

buildInfoKeys := Seq[BuildInfoKey](name, version, scalaVersion, sbtVersion)

// Injected via Jenkins - these props are set at build time:
buildInfoKeys ++= Seq[BuildInfoKey](
  "extraInfo" -> scala.util.Properties.envOrElse("EXTRA_INFO", "N/A"),
  "builtBy" -> scala.util.Properties.envOrElse("NODE_NAME", "N/A"),
  "builtAt" -> new java.util.Date().toString)

buildInfoPackage := "com.themillhousegroup.myproject.utils"

The Jenkins Wiki has a really useful list of available properties which you can plunder to your heart's content. It's definitely well worth creating a health or build-info page that exposes these.
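
As a minimal sketch of such a build-info page, assuming sbt-buildinfo has generated an object from the keys configured above: the BuildInfo object below is a hand-written stand-in (the real one is generated at compile time, so the values here are placeholders), and BuildInfoPage is a hypothetical helper name.

```scala
// Stand-in for the object sbt-buildinfo generates from the configured keys.
// In a real project this is generated at compile time; these values are placeholders.
object BuildInfo {
  val name: String = "myproject"
  val version: String = "1.0.0"
  val extraInfo: String = "N/A"
  val builtBy: String = "N/A"
  val builtAt: String = "N/A"
}

// Hypothetical helper: renders the build info as plain text for a health page.
object BuildInfoPage {
  def fields: Seq[(String, String)] = Seq(
    "name"      -> BuildInfo.name,
    "version"   -> BuildInfo.version,
    "extraInfo" -> BuildInfo.extraInfo,
    "builtBy"   -> BuildInfo.builtBy,
    "builtAt"   -> BuildInfo.builtAt
  )

  // One "key: value" pair per line.
  def render: String = fields.map { case (k, v) => s"$k: $v" }.mkString("\n")
}
```

In a Play app, a controller action could then return something like Ok(BuildInfoPage.render) to expose it.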

Adding Value with the Heroku Jenkins Plugin

Although Heroku works spectacularly well with a simple git push, the Heroku Jenkins Plugin adds a couple of extra tricks that are very worthwhile, such as being able to place your app into/out-of "maintenance mode" - but the most pertinent here is the Heroku: Set Configuration build step. Adding this step to your build allows you to set any number of environment variables in the Heroku App that you are about to push to. You can imagine how useful this is when combined with the sbt-buildinfo plugin described above!

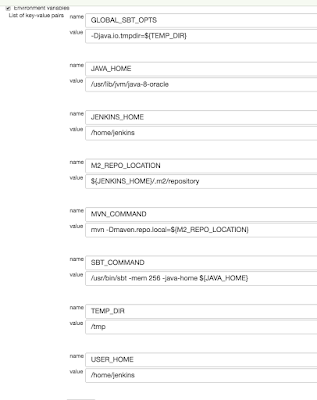

Here's what it looks like for one of my projects, where the built Play project is pushed to a test environment on Heroku:

Notice how I set HEROKU_ENV, which I then use in my app to determine whether key features (for example, Google Analytics) are enabled or not.

Here are a couple of helper classes that I've used repeatedly (ooh! time for a new library!) in my Heroku projects for this purpose:

import scala.util.Properties

object EnvNames {
  val DEV = "dev"
  val TEST = "test"
  val PROD = "prod"
  val STAGE = "stage"
}

object HerokuApp {
  lazy val herokuEnv = Properties.envOrElse("HEROKU_ENV", EnvNames.DEV)

  lazy val isProd = (EnvNames.PROD == herokuEnv)
  lazy val isStage = (EnvNames.STAGE == herokuEnv)
  lazy val isDev = (EnvNames.DEV == herokuEnv)

  def ifProd[T](prod:T):Option[T] = if (isProd) Some(prod) else None

  def ifProdElse[T](prod:T, nonProd:T):T = {
    if (isProd) prod else nonProd
  }
}
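
Here is a rough sketch of how helpers like these might be used to toggle a feature such as Google Analytics. To keep it self-contained and testable, this variant takes the environment name as a constructor parameter instead of reading HEROKU_ENV directly; EnvCheck, AnalyticsExample, and the tracking ID are hypothetical names and values, not part of my projects.

```scala
// Variant of the helper whose environment name is passed in, so it can be
// exercised without setting real environment variables.
class EnvCheck(herokuEnv: String) {
  val isProd: Boolean = herokuEnv == "prod"

  def ifProd[T](prod: T): Option[T] = if (isProd) Some(prod) else None

  def ifProdElse[T](prod: T, nonProd: T): T = if (isProd) prod else nonProd
}

object AnalyticsExample {
  // Hypothetical tracking ID; only handed out when running in prod.
  def analyticsId(env: EnvCheck): Option[String] = env.ifProd("UA-XXXXXXX-1")

  // Pick a quieter log level in prod, a chattier one everywhere else.
  def logLevel(env: EnvCheck): String = env.ifProdElse("WARN", "DEBUG")
}
```

A view template can then render the analytics snippet only when the Option is defined, so non-prod environments never emit it.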

... And then it all went pear-shaped

I had quite a number of Play 2.x apps using this Jenkins+Heroku+BuildInfo arrangement to great success. But then at some point (around September 2015 as far as I can tell) the Heroku Jenkins Plugin started throwing an exception while trying to Set Configuration. For the benefit of any desperate Google-trawlers, it looks like this:

at com.heroku.api.parser.Json.parse(Json.java:73)

at com.heroku.api.request.releases.ListReleases.getResponse(ListReleases.java:63)

at com.heroku.api.request.releases.ListReleases.getResponse(ListReleases.java:22)

at com.heroku.api.connection.JerseyClientAsyncConnection$1.handleResponse(JerseyClientAsyncConnection.java:79)

at com.heroku.api.connection.JerseyClientAsyncConnection$1.get(JerseyClientAsyncConnection.java:71)

at com.heroku.api.connection.JerseyClientAsyncConnection.execute(JerseyClientAsyncConnection.java:87)

at com.heroku.api.HerokuAPI.listReleases(HerokuAPI.java:296)

at com.heroku.ConfigAdd.perform(ConfigAdd.java:55)

at com.heroku.AbstractHerokuBuildStep.perform(AbstractHerokuBuildStep.java:114)

at com.heroku.ConfigAdd.perform(ConfigAdd.java:22)

at hudson.tasks.BuildStepMonitor$1.perform(BuildStepMonitor.java:20)

at hudson.model.AbstractBuild$AbstractBuildExecution.perform(AbstractBuild.java:761)

at hudson.model.Build$BuildExecution.build(Build.java:203)

at hudson.model.Build$BuildExecution.doRun(Build.java:160)

at hudson.model.AbstractBuild$AbstractBuildExecution.run(AbstractBuild.java:536)

at hudson.model.Run.execute(Run.java:1741)

at hudson.model.FreeStyleBuild.run(FreeStyleBuild.java:43)

at hudson.model.ResourceController.execute(ResourceController.java:98)

at hudson.model.Executor.run(Executor.java:374)

Caused by: com.heroku.api.exception.ParseException: Unable to parse data.

at com.heroku.api.parser.JerseyClientJsonParser.parse(JerseyClientJsonParser.java:24)

at com.heroku.api.parser.Json.parse(Json.java:70)

... 18 more

Caused by: org.codehaus.jackson.map.JsonMappingException: Can not deserialize instance of java.lang.String out of START_OBJECT token

at [Source: [B@176e40b; line: 1, column: 473] (through reference chain: com.heroku.api.Release["pstable"])

at org.codehaus.jackson.map.JsonMappingException.from(JsonMappingException.java:160)

at org.codehaus.jackson.map.deser.StdDeserializationContext.mappingException(StdDeserializationContext.java:198)

at org.codehaus.jackson.map.deser.StdDeserializer$StringDeserializer.deserialize(StdDeserializer.java:656)

at org.codehaus.jackson.map.deser.StdDeserializer$StringDeserializer.deserialize(StdDeserializer.java:625)

at org.codehaus.jackson.map.deser.MapDeserializer._readAndBind(MapDeserializer.java:235)

at org.codehaus.jackson.map.deser.MapDeserializer.deserialize(MapDeserializer.java:165)

at org.codehaus.jackson.map.deser.MapDeserializer.deserialize(MapDeserializer.java:25)

at org.codehaus.jackson.map.deser.SettableBeanProperty.deserialize(SettableBeanProperty.java:230)

at org.codehaus.jackson.map.deser.SettableBeanProperty$MethodProperty.deserializeAndSet(SettableBeanProperty.java:334)

at org.codehaus.jackson.map.deser.BeanDeserializer.deserializeFromObject(BeanDeserializer.java:495)

at org.codehaus.jackson.map.deser.BeanDeserializer.deserialize(BeanDeserializer.java:351)

at org.codehaus.jackson.map.deser.CollectionDeserializer.deserialize(CollectionDeserializer.java:116)

at org.codehaus.jackson.map.deser.CollectionDeserializer.deserialize(CollectionDeserializer.java:93)

at org.codehaus.jackson.map.deser.CollectionDeserializer.deserialize(CollectionDeserializer.java:25)

at org.codehaus.jackson.map.ObjectMapper._readMapAndClose(ObjectMapper.java:2131)

at org.codehaus.jackson.map.ObjectMapper.readValue(ObjectMapper.java:1481)

at com.heroku.api.parser.JerseyClientJsonParser.parse(JerseyClientJsonParser.java:22)

... 19 more

Build step 'Heroku: Set Configuration' marked build as failure

Effectively, it looks like Heroku has changed the structure of their pstable object, and that the baked-into-a-JAR definition of it (Map<String, String> in Java) will no longer work.
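
To illustrate the failure mode without pulling in Jackson, here is a rough sketch that mimics binding a parsed JSON object to a string-valued map. The sample pstable payloads are invented for illustration (the stack trace doesn't show what Heroku actually returns), and PstableBinding and bindStrings are hypothetical names.

```scala
object PstableBinding {
  // Mimics binding to Map<String, String>: every value must be a String.
  def bindStrings(parsed: Map[String, Any]): Either[String, Map[String, String]] = {
    // Find the first key whose value is not a plain string, if any.
    val bad = parsed.collectFirst { case (k, v) if !v.isInstanceOf[String] => k }
    bad match {
      case Some(key) => Left(s"Can not deserialize String for key '$key'")
      case None      => Right(parsed.map { case (k, v) => k -> v.asInstanceOf[String] })
    }
  }

  // Assumed old-style payload: process type -> command string.
  val flat: Map[String, Any] = Map("web" -> "target/start")

  // Assumed new-style payload: process type -> nested object.
  val nested: Map[String, Any] = Map("web" -> Map("command" -> "target/start"))
}
```

Binding the flat payload succeeds, while the nested one is rejected, much like the START_OBJECT complaint in the trace. Typing the values as Any (Java's Object) accepts both shapes, at the cost of leaving interpretation to the caller.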

Open-Source to the rescue

Although the Java APIs for Heroku have been untouched since 2012, and indeed the Jenkins Plugin itself was announced deprecated (without a suggested replacement) only a week ago, fortunately the whole shebang is open-source on GitHub so I took it upon myself to download the code and fix this thing. A lot of swearing, further downloading of increasingly-obscure Heroku libraries and general hacking later, and not only is the bug fixed:

- Map<String, String> pstable;

+ Map<String, Object> pstable;

But there are new tests to prove it, and a new Heroku Jenkins Plugin available here now. Grab this binary, and go to Manage Jenkins -> Manage Plugins -> Advanced -> Upload Plugin and drop it in. Restart Jenkins, and you're all set.