Thursday, 25 January 2018

OpenShift - the 'f' is silent

After almost-exactly four years of free-tier OpenShift usage for Jenkins purposes, I have finally had to throw up my hands and declare it unworkable.

The first concern was earlier in 2017 when, with minimal notice, they announced the end-of-life of the OpenShift 2.0 platform which was serving me so well. Simultaneously they dropped the number of nodes available to free-tier customers from 3 to 1. A move I would have been fine with if there had been any way for me to pay them down here in Australia - a fact I lamented about almost 2 years ago.

Then, in the big "upgrade" to version 3, OpenShift disposed of what I considered to be their best feature - having the configuration of a node held under version control in Git; push a change, the node restarts with the new config. Awesome. Instead, version 3 handed us a complex new ecosystem of pods, containers, services, images, controllers, registries and applications, administered through a labyrinth of somewhat-complete and occasionally-buggy web pages. Truly a downgrade from my perspective.

The final straw was the extraordinarily fragile and flaky nature of the one-and-only node (or is it "pod"? Or "application"? I can't even tell any more) that I have running as a Jenkins master. Now this is hardly a taxing thing to run - I have a $5-per-month Vultr instance actually being a slave and doing real work - yet it seems to be unable to stay up reliably while doing such simple tasks as changing a job's configuration. It also makes "continuous integration" a bit of a joke if pushing to a repository doesn't actually end up running tests and building a new artefact because the node was unresponsive to the webhook from Github/Bitbucket. Sigh.

You can imagine how great it is to see this page when you've just hit "save" on the meticulously-detailed configuration for a brand new Jenkins job...

So, in what I hope is not a taste of things to come, I'm de-clouding my Jenkins instance and moving it back to the only "on-premises" bit of "server hardware" I still own - my Synology DS209 NAS. Stay tuned.

Saturday, 30 July 2016

Vultr Jenkins Slave GO!

I selected a "20Gb SSD / 1024Mb" instance located in "Silicon Valley" for my slave. Being on the opposite side of the US to my OpenShift boxes feels like a small, but important factor in preventing total catastrophe in the event of a datacenter outage.

Setup Steps

(All these steps should be performed as root.)

User and access

Create a jenkins user:

addgroup jenkins

adduser jenkins --ingroup jenkins

Now grab the id_rsa.pub from your Jenkins master's .ssh directory and put it into /home/jenkins/.ssh/authorized_keys. In the Jenkins UI, set up a new set of credentials corresponding to this, using "use a file from the Jenkins master .ssh". (which by the way, on OpenShift will be located at /var/lib/openshift/{userid}/app-root/data/.ssh/jenkins_id_rsa).
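The key installation can be scripted along these lines. This is a minimal sketch rather than the exact commands I ran: the install_pubkey name and its two arguments are assumptions made up for illustration, and it expects the master's id_rsa.pub to have already been copied onto the slave.

```shell
#!/bin/sh
# Hypothetical helper: append a public key to a user's authorized_keys,
# creating the .ssh directory with the permissions sshd expects.
# Usage: install_pubkey <pubkey-file> <home-dir>
install_pubkey() {
  pubkey_file="$1"
  home_dir="$2"
  ssh_dir="$home_dir/.ssh"

  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"

  # Append only if this exact key line is not already present
  touch "$ssh_dir/authorized_keys"
  if ! grep -qxF -- "$(cat "$pubkey_file")" "$ssh_dir/authorized_keys"; then
    cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
  fi
  chmod 600 "$ssh_dir/authorized_keys"
}
```

As root on the slave, this would be called as something like install_pubkey /tmp/id_rsa.pub /home/jenkins (the /tmp path is just an example), followed by chown -R jenkins:jenkins /home/jenkins/.ssh so the files belong to the jenkins user.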

I like to keep things organised, so I made a vultr.com "domain" container and then created the credentials inside.

Install Java

echo "deb http://ppa.launchpad.net/webupd8team/java/ubuntu xenial main" | tee /etc/apt/sources.list.d/webupd8team-java.list

echo "deb-src http://ppa.launchpad.net/webupd8team/java/ubuntu xenial main" | tee -a /etc/apt/sources.list.d/webupd8team-java.list

apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys EEA14886

apt-get update

apt-get install oracle-java8-installer

Install SBT

apt-get install apt-transport-https

echo "deb https://dl.bintray.com/sbt/debian /" | tee -a /etc/apt/sources.list.d/sbt.list

apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 642AC823

apt-get update

apt-get install sbt

More useful bits

Git

apt-get install git

NodeJS

curl -sL https://deb.nodesource.com/setup_4.x | bash -

apt-get install nodejs
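Once everything is installed, it is worth confirming that the jenkins user can actually see each tool on its PATH before pointing a job at the new slave. A small sketch, assuming nothing beyond a POSIX shell; check_tools is a hypothetical helper name, not something that ships with Jenkins or Ubuntu:

```shell
#!/bin/sh
# Hypothetical helper: report which of the named commands are on the PATH.
# Returns 0 if all are present, 1 if any are missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "ok:      $tool"
    else
      echo "MISSING: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

Run as the jenkins user, check_tools java sbt git nodejs should report every tool as ok if the steps above succeeded.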

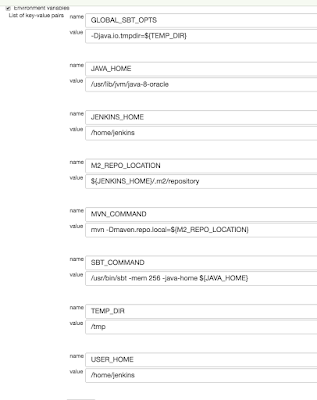

This machine is quite dramatically faster and has twice the RAM of my usual OpenShift nodes, making it extra-important to have those differences defined per-node instead of hard-coded into a job. One thing I was surprised to have to define was a memory limit for the JVM (via SBT's -mem argument) as I was getting "There is insufficient memory for the Java Runtime Environment to continue" errors when letting it choose its own upper limit. For posterity, here are the environment variables I have configured for my Vultr slave:

Wednesday, 27 April 2016

Happy and Healthy Heterogeneous Build Slaves in Jenkins

So a second slave was brought on line - my old Dell Inspiron 9300 laptop from 2006 - which (after an upgrade to 2Gb of RAM for a handful of dollars online) has done a sterling job. Running Ubuntu 14.04 Desktop edition seems to not tax the Intel Pentium M too badly, and it seemed crazy to get rid of that amazing 17" 1920x1200 screen for a pittance on eBay. Now at this point I had two slaves on line, with highly different capabilities.

Horses for Courses

The OpenShift node (slave1) has low RAM, slow CPU, very limited persistent storage but exceptionally quick network access (being located in a datacenter somewhere on the US East Coast), while the laptop (slave2) has a reasonable amount of RAM, moderate CPU, tons of disk but relatively slow transfer rates to the outside world, via ADSL2 down here in Australia. How to deal with all these differences when running jobs that could be farmed out to either node?

The solution is of course the classic layer of indirection: environment variables defined per node, which allow the different boxes to be addressed consistently from within a job. Here is the configuration for my slave1 Redhat box on OpenShift:

Note the -mem argument in the SBT_COMMAND which sets the -Xmx and -Xms to this number and PermGen to 2* this number, keeping a lid on resource usage. And here's slave2, the Ubuntu laptop, with no such restriction needed:

And here's what a typical build job looks like:
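In shell terms, a build step that relies on this indirection might look something like the sketch below. SBT_COMMAND is the per-node variable mentioned above; the run_build wrapper and the fallback to a plain sbt are assumptions for illustration, not a copy of the actual job configuration.

```shell
#!/bin/sh
# Sketch of a job's "Execute shell" build step.
# SBT_COMMAND is expected to be defined per node (for example with a -mem
# limit on a small node). Falling back to plain "sbt" is an assumption for
# nodes that define nothing.
run_build() {
  # Intentionally unquoted so "sbt -mem 128" splits into command + arguments
  ${SBT_COMMAND:-sbt} "$@"
}
```

The job itself then only ever says run_build clean test, and each node decides what that expands to.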

Caring for Special-needs Nodes

Finally, my disk-challenged slave1 node gets a couple of Jenkins jobs to tend to it. The first periodically runs a git gc in each .git directory under the Jenkins workspace (as per a Stack Overflow answer) - it runs quota before-and-after to show how much (if anything) was cleared up:

The second job periodically removes the target directory wherever it is found - SBT builds leave a lot of stuff in here that can really add up. Here's what it looks like:
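Both housekeeping jobs boil down to a find over the workspace. A rough sketch, assuming the workspace root is passed in as the first argument; the function names are hypothetical and this is not the literal content of either job:

```shell
#!/bin/sh
# Hypothetical housekeeping helpers; both take the workspace root as $1.

# Run "git gc" in every repository found under the workspace.
gc_workspaces() {
  find "$1" -type d -name .git -prune -print | while IFS= read -r git_dir; do
    echo "git gc in $git_dir"
    git --git-dir="$git_dir" gc --quiet
  done
}

# Delete every directory named "target" under the workspace.
# -prune stops find from descending into a directory it is about to remove.
clean_targets() {
  find "$1" -type d -name target -prune -exec rm -rf {} +
}
```

Calling quota (or du -sh on the workspace) immediately before and after either function gives the same before-and-after picture of how much space was recovered.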

Friday, 4 March 2016

Unbreaking the Heroku Jenkins Plugin

CI Indistinguishable From Magic

I'm extremely happy with my OpenShift-based Jenkins CI setup that deploys to Heroku. It really does do the business, and the price simply cannot be beaten.

Know Thy Release

Too many times, at too many workplaces, I have faced the problem of trying to determine "Is this the latest code?" from "the front end". Determined not to have this problem in my own apps, I've been employing a couple of tricks for a few years now that give excellent traceability.

Firstly, I use the nifty sbt-buildinfo plugin that allows build-time values to be injected into source code. A perfect match for Jenkins builds, it creates a Scala object that can then be accessed as if it contained hard-coded values. Here's what I put in my build.sbt:

buildInfoSettings

sourceGenerators in Compile <+= buildInfo

buildInfoKeys := Seq[BuildInfoKey](name, version, scalaVersion, sbtVersion)

// Injected via Jenkins - these props are set at build time:

buildInfoKeys ++= Seq[BuildInfoKey](

"extraInfo" -> scala.util.Properties.envOrElse("EXTRA_INFO", "N/A"),

"builtBy" -> scala.util.Properties.envOrElse("NODE_NAME", "N/A"),

"builtAt" -> new java.util.Date().toString)

buildInfoPackage := "com.themillhousegroup.myproject.utils"

The Jenkins Wiki has a really useful list of available properties which you can plunder to your heart's content. It's definitely well worth creating a health or build-info page that exposes these.
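On the Jenkins side, the EXTRA_INFO variable read by the build.sbt above has to be set before SBT is invoked. One way to do that is sketched below. JOB_NAME and BUILD_NUMBER are standard Jenkins variables and GIT_COMMIT comes from the Git plugin; the build_extra_info function, the output format and the fallback values are assumptions of mine for illustration.

```shell
#!/bin/sh
# Compose a human-readable build description from variables Jenkins sets.
# The "unknown" / 0 fallbacks are an assumption so that the function also
# works when run by hand outside Jenkins.
build_extra_info() {
  commit="${GIT_COMMIT:-unknown}"
  # Shorten the commit hash to its first 7 characters
  short_commit=$(printf '%s' "$commit" | cut -c1-7)
  echo "${JOB_NAME:-unknown}#${BUILD_NUMBER:-0} @ ${short_commit}"
}
```

In the build step, export EXTRA_INFO="$(build_extra_info)" ahead of the sbt invocation makes the value available to scala.util.Properties.envOrElse at build time.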

Adding Value with the Heroku Jenkins Plugin

Although Heroku works spectacularly well with a simple git push, the Heroku Jenkins Plugin adds a couple of extra tricks that are very worthwhile, such as being able to place your app into/out-of "maintenance mode" - but the most pertinent here is the Heroku: Set Configuration build step. Adding this step to your build allows you to set any number of environment variables in the Heroku App that you are about to push to. You can imagine how useful this is when combined with the sbt-buildinfo plugin described above!

Here's what it looks like for one of my projects, where the built Play project is pushed to a test environment on Heroku:

Notice how I set HEROKU_ENV, which I then use in my app to determine whether key features (for example, Google Analytics) are enabled or not.

Here are a couple of helper classes that I've used repeatedly (ooh! time for a new library!) in my Heroku projects for this purpose:

import scala.util.Properties

object EnvNames {

val DEV = "dev"

val TEST = "test"

val PROD = "prod"

val STAGE = "stage"

}

object HerokuApp {

lazy val herokuEnv = Properties.envOrElse("HEROKU_ENV", EnvNames.DEV)

lazy val isProd = (EnvNames.PROD == herokuEnv)

lazy val isStage = (EnvNames.STAGE == herokuEnv)

lazy val isDev = (EnvNames.DEV == herokuEnv)

def ifProd[T](prod:T):Option[T] = if (isProd) Some(prod) else None

def ifProdElse[T](prod:T, nonProd:T):T = {

if (isProd) prod else nonProd

}

}

... And then it all went pear-shaped

I had quite a number of Play 2.x apps using this Jenkins+Heroku+BuildInfo arrangement to great success. But then at some point (around September 2015 as far as I can tell) the Heroku Jenkins Plugin started throwing an exception while trying to Set Configuration. For the benefit of any desperate Google-trawlers, it looks like this:

    at com.heroku.api.parser.Json.parse(Json.java:73)
    at com.heroku.api.request.releases.ListReleases.getResponse(ListReleases.java:63)
    at com.heroku.api.request.releases.ListReleases.getResponse(ListReleases.java:22)
    at com.heroku.api.connection.JerseyClientAsyncConnection$1.handleResponse(JerseyClientAsyncConnection.java:79)
    at com.heroku.api.connection.JerseyClientAsyncConnection$1.get(JerseyClientAsyncConnection.java:71)
    at com.heroku.api.connection.JerseyClientAsyncConnection.execute(JerseyClientAsyncConnection.java:87)
    at com.heroku.api.HerokuAPI.listReleases(HerokuAPI.java:296)
    at com.heroku.ConfigAdd.perform(ConfigAdd.java:55)
    at com.heroku.AbstractHerokuBuildStep.perform(AbstractHerokuBuildStep.java:114)
    at com.heroku.ConfigAdd.perform(ConfigAdd.java:22)
    at hudson.tasks.BuildStepMonitor$1.perform(BuildStepMonitor.java:20)
    at hudson.model.AbstractBuild$AbstractBuildExecution.perform(AbstractBuild.java:761)
    at hudson.model.Build$BuildExecution.build(Build.java:203)
    at hudson.model.Build$BuildExecution.doRun(Build.java:160)
    at hudson.model.AbstractBuild$AbstractBuildExecution.run(AbstractBuild.java:536)
    at hudson.model.Run.execute(Run.java:1741)
    at hudson.model.FreeStyleBuild.run(FreeStyleBuild.java:43)
    at hudson.model.ResourceController.execute(ResourceController.java:98)
    at hudson.model.Executor.run(Executor.java:374)
Caused by: com.heroku.api.exception.ParseException: Unable to parse data.
    at com.heroku.api.parser.JerseyClientJsonParser.parse(JerseyClientJsonParser.java:24)
    at com.heroku.api.parser.Json.parse(Json.java:70)
    ... 18 more
Caused by: org.codehaus.jackson.map.JsonMappingException: Can not deserialize instance of java.lang.String out of START_OBJECT token at [Source: [B@176e40b; line: 1, column: 473] (through reference chain: com.heroku.api.Release["pstable"])
    at org.codehaus.jackson.map.JsonMappingException.from(JsonMappingException.java:160)
    at org.codehaus.jackson.map.deser.StdDeserializationContext.mappingException(StdDeserializationContext.java:198)
    at org.codehaus.jackson.map.deser.StdDeserializer$StringDeserializer.deserialize(StdDeserializer.java:656)
    at org.codehaus.jackson.map.deser.StdDeserializer$StringDeserializer.deserialize(StdDeserializer.java:625)
    at org.codehaus.jackson.map.deser.MapDeserializer._readAndBind(MapDeserializer.java:235)
    at org.codehaus.jackson.map.deser.MapDeserializer.deserialize(MapDeserializer.java:165)
    at org.codehaus.jackson.map.deser.MapDeserializer.deserialize(MapDeserializer.java:25)
    at org.codehaus.jackson.map.deser.SettableBeanProperty.deserialize(SettableBeanProperty.java:230)
    at org.codehaus.jackson.map.deser.SettableBeanProperty$MethodProperty.deserializeAndSet(SettableBeanProperty.java:334)
    at org.codehaus.jackson.map.deser.BeanDeserializer.deserializeFromObject(BeanDeserializer.java:495)
    at org.codehaus.jackson.map.deser.BeanDeserializer.deserialize(BeanDeserializer.java:351)
    at org.codehaus.jackson.map.deser.CollectionDeserializer.deserialize(CollectionDeserializer.java:116)
    at org.codehaus.jackson.map.deser.CollectionDeserializer.deserialize(CollectionDeserializer.java:93)
    at org.codehaus.jackson.map.deser.CollectionDeserializer.deserialize(CollectionDeserializer.java:25)
    at org.codehaus.jackson.map.ObjectMapper._readMapAndClose(ObjectMapper.java:2131)
    at org.codehaus.jackson.map.ObjectMapper.readValue(ObjectMapper.java:1481)
    at com.heroku.api.parser.JerseyClientJsonParser.parse(JerseyClientJsonParser.java:22)
    ... 19 more
Build step 'Heroku: Set Configuration' marked build as failure

Effectively, it looks like Heroku has changed the structure of their pstable object, and that the baked-into-a-JAR definition of it (Map<String, String> in Java) will no longer work.

Open-Source to the rescue

Although the Java APIs for Heroku have been untouched since 2012, and indeed the Jenkins Plugin itself was announced deprecated (without a suggested replacement) only a week ago, fortunately the whole shebang is open-source on Github so I took it upon myself to download the code and fix this thing. A lot of swearing, further downloading of increasingly-obscure Heroku libraries and general hacking later, and not only is the bug fixed:

- Map<String, String> pstable;

+ Map<String, Object> pstable;

But there are new tests to prove it, and a new Heroku Jenkins Plugin available here now. Grab this binary, and go to Manage Jenkins -> Manage Plugins -> Advanced -> Upload Plugin and drop it in. Reboot Jenkins, and you're all set.

Friday, 26 February 2016

Making better software with Github

This solved my problem, in that I no longer ran out of PermGen on my build slave. But the repercussions were far-reaching. Any decent public-facing library needs documentation, and Github's README.md is an incredibly convenient place to put it all. I've lost count of the number of times I've found myself reading my own documentation up there on Github; if Arallon was still a hodge-podge of classes within my application, I'd have spent hours trying to deduce my own functionality ...

Of course, a decent open-source library must also have excellent tests and test coverage. Splitting Arallon into its own library gave the tests a new-found focus and similarly the test coverage (measured with JaCoCo) was much more significant.

Since that first library split, I've peeled off many other utility libraries from private projects; almost always things to make Play2 app development a little quicker and/or easier:

- play2-reactivemongo-mocks - Mocking out a ReactiveMongo persistence layer

- play2-mailgun - Easily send email via MailGun's API

- pac4j-underarmour - Integrates UnderArmour (aka MapMyRun) into the pac4j authentication framework

- mondrian - A super-simple CRUD layer for Play + ReactiveMongo

As a shameless plug, I use yet another of my own projects (I love my own dogfood!), sbt-skeleton, to set up a brand new SBT project with tons of useful defaults like dependencies, repository locations, plugins etc as well as a skeleton directory structure. This helps make the decision to extract a library a no-brainer; I can have a library up-and-building, from scratch, in minutes. This includes having it build and publish to BinTray, which is simply a matter of cloning an existing Jenkins job and changing the name of the source Github repo.

I've found the implied peer-pressure of having code "out there" for public scrutiny has a strong positive effect on my overall software quality. I'm sure I'm not the only one. I highly recommend going through the process of extracting something re-usable from private code and open-sourcing it into a library you are prepared to stand behind. It will make you a better software developer in many ways.

* This is not a criticism of OpenShift; I love them and would gladly pay them money if they would only take my puny Australian dollars :-(

Friday, 12 December 2014

Walking away from CloudBees Part 5 - Publishing and Fine-Tuning

Publishing private artefacts to a private Nexus repository

As per my new world order diagram, I decided to use my third and final free OpenShift node as a Nexus box, and what a great move that turned out to be. Without a doubt the easiest setup of a Nexus box I've ever experienced:

- Log in to OpenShift

- Click the Add Application... button

- Scroll down to the Code Anything heading, and paste http://nexuscartridge-openshiftci.rhcloud.com/ into the URL textbox

- Click Next, nominate the URL for the box, and wait a few minutes

Publishing open-source artefacts to a public repository

As all of my open-source efforts are now written in Scala with SBT as the build tool, it was a simple matter to add the bintray-sbt plugin to each of them, allowing publication to BinTray, or more specifically, The Millhouse Group's little corner of it.

The only trick here was SSHing into the Jenkins Build slave (one time) and adding an ${OPENSHIFT_DATA_DIR}/.bintray/.credentials file so that an sbt publish would succeed.

Deployment of webapps to Heroku

As with most things open and/or free, someone has been here before - this blog post, together with the Heroku Jenkins Plugin README, was a very good starting point for getting this all working.

In brief, the steps are:

- Install the Heroku and Git Publisher Jenkins plugins

- Grab your Heroku API key from your Account Settings page, and put it into Manage Jenkins -> Configure System -> Heroku -> API Key

- Grab the details of the Heroku remote from your .git/config in your local repo, or from the "Git URL" in the Info on your app's Settings page on Heroku.

- Set this up as an additional Git repo in your Jenkins build, and name it heroku. For safety, I like to name my other repo (i.e. the one holding the source that triggers builds) appropriately as well; it avoids confusion.

- Actual example:

  - I name my source repo bitbucket

  - Thus my Branch Specifier is bitbucket/master

- Add a new Git Publisher Post-Build Action, that pushes to heroku/master when the build succeeds

Fine-tuning the OpenShift build setup

Having to do "Layer-8" timezone conversion when reading build logs is just annoying so put the slave node into your local time zone by navigating to (Manage Jenkins -> Manage Nodes -> Slave -> Configure icon -> Launch Method -> Advanced -> JVM Options) (phew!) and setting it to:

-Duser.home=${OPENSHIFT_DATA_DIR} -Duser.timezone="Australia/Melbourne" -XX:MaxPermSize=1M -Xmx2M -Xss128k

(You might need to consult the list of Java timezone ids)

The final pieces of the puzzle were configuring the "final destinations" of my private artifacts (my open-source stuff gets sent to BinTray courtesy of the bintray-sbt plugin). Details follow.

After that, a little bit of futzing around to get auto-triggered builds working from both GitHub and BitBucket, and I had everything back to normal, or possibly, even better - I now have unlimited app slots on Heroku versus four on CloudBees - and I'm somewhat insulated from outages of a single provider. Happy!

Tuesday, 4 November 2014

Walking away from CloudBees Episode 4: A New Hope

The OpenShift Free Tier gives you three Small "gears" (OpenShift-speak for "machine instance"), and there's even a "cartridge" (OpenShift-speak for "template") for a Jenkins master!

There are quite a few resources to help with setting up a Jenkins master on OpenShift, so I won't repeat them, but it was really very easy, and so far, I haven't had to tweak the configuration of that box/machine/gear/cartridge/whatever at all. Awesome stuff. The only trick was that setting up at least one build-slave is compulsory - the master won't build anything for you. Again, there are some good pages to help you with this, and it's nothing too different to setting up a build slave on your own physical hardware - sharing SSH keys etc.

The next bit was slightly trickier; installing SBT onto an OpenShift Jenkins build slave. This blog post gave me 95 percent of the solution, which I then tweaked to get SBT 0.13.6 from the official source. This also introduced me to the Git-driven configuration system of OpenShift, which is super-cool, and properly immutable unlike things like Puppet. The following goes in .openshift/action_hooks/start in the Git repository for your build slave, and once you git push, the box gets stopped, wiped, and restarted with the new start script. If you introduce an error in your push, it gets rejected. Bliss.

cd $OPENSHIFT_DATA_DIR

if [[ -d sbt ]]; then

echo "SBT installed"

else

SBT_VERSION=0.13.6

SBT_URL="https://dl.bintray.com/sbt/native-packages/sbt/${SBT_VERSION}/sbt-${SBT_VERSION}.tgz"

echo Fetching SBT ${SBT_VERSION} from $SBT_URL

echo Installing SBT ${SBT_VERSION} to $OPENSHIFT_DATA_DIR

curl -L $SBT_URL -o sbt.tgz

tar zxvf sbt.tgz sbt

rm sbt.tgz

fi

The next hurdle was getting SBT to not die because it can't write into $HOME on an OpenShift node, which was fixed by setting -Duser.home=${OPENSHIFT_DATA_DIR} when invoking SBT. (OPENSHIFT_DATA_DIR is the de-facto writeable place for persistent storage in OpenShift - you'll see it mentioned a few more times in this post)

But an "OpenShift Small gear" build slave is slow and severely RAM-restricted - so much so that at first, I was getting heaps of these during my builds:

... Compiling 11 Scala sources to /var/lib/openshift//app-root/data/workspace/ /target/scala-2.11/test-classes...
FATAL: hudson.remoting.RequestAbortedException: java.io.IOException: Unexpected termination of the channel
hudson.remoting.RequestAbortedException: hudson.remoting.RequestAbortedException: java.io.IOException: Unexpected termination of the channel
    at hudson.remoting.RequestAbortedException.wrapForRethrow(RequestAbortedException.java:41)
    at hudson.remoting.RequestAbortedException.wrapForRethrow(RequestAbortedException.java:34)
    at hudson.remoting.Request.call(Request.java:174)
    at hudson.remoting.Channel.call(Channel.java:742)
    at hudson.remoting.RemoteInvocationHandler.invoke(RemoteInvocationHandler.java:168)
    at com.sun.proxy.$Proxy45.join(Unknown Source)
    at hudson.Launcher$RemoteLauncher$ProcImpl.join(Launcher.java:956)
    at hudson.tasks.CommandInterpreter.join(CommandInterpreter.java:137)
    at hudson.tasks.CommandInterpreter.perform(CommandInterpreter.java:97)
    at hudson.tasks.CommandInterpreter.perform(CommandInterpreter.java:66)
    at hudson.tasks.BuildStepMonitor$1.perform(BuildStepMonitor.java:20)
    at hudson.model.AbstractBuild$AbstractBuildExecution.perform(AbstractBuild.java:756)
    at hudson.model.Build$BuildExecution.build(Build.java:198)
    at hudson.model.Build$BuildExecution.doRun(Build.java:159)
    at hudson.model.AbstractBuild$AbstractBuildExecution.run(AbstractBuild.java:529)
    at hudson.model.Run.execute(Run.java:1706)
    at hudson.model.FreeStyleBuild.run(FreeStyleBuild.java:43)
    at hudson.model.ResourceController.execute(ResourceController.java:88)
    at hudson.model.Executor.run(Executor.java:232)
...

This is actually Jenkins losing contact with the build slave, because it has exceeded the 512Mb memory limit and been forcibly terminated. The fact that it did this while compiling Scala - specifically while compiling Specs2 tests - reminds me of an interesting investigation done about compile time that pointed out how Specs2's trait-heavy style blows compilation times (and I suspect, resources) out horrendously compared to other frameworks - but that is for another day!

If you are experiencing these errors on OpenShift, you can actually confirm that it is a "memory limit violation" by reading a special counter that increments when the violation occurs. Note this count never resets, even if the gear is restarted, so you just need to watch for changes.

A temporary fix for these issues seemed to be running sbt test rather than sbt clean test; obviously this was using just slightly less heap space and getting away with it, but I felt very nervous at the fragility of not just this "solution" but also of the resulting artifact - if I'm going to the trouble of using a CI tool to publish these things, it seems a bit stupid to not build off a clean foundation.

So after a lot of trawling around and trying things, I found a two-fold solution to keeping an OpenShift Jenkins build slave beneath the fatal 512Mb threshold.

Firstly, remember while a build slave is executing a job there are actually two Java processes running - the "slave communication channel" (for want of a better phrase) and the job itself. The JVM for the slave channel can safely be tuned to consume very few resources, leaving more for the "main job". So, in the Jenkins node configuration for the build slave, under the "Advanced..." button, set the "JVM Options" to:

-Duser.home=${OPENSHIFT_DATA_DIR} -XX:MaxPermSize=1M -Xmx2M -Xss128k

Secondly, set some more JVM options for SBT to use - for SBT > 0.12.0 this is most easily done by providing a -mem argument, which will force sensible values for -Xms, -Xmx and -XX:MaxPermSize. Also, because "total memory used by the JVM" can be fairly-well approximated with the equation:

export SBT_OPTS="-Duser.home=${OPENSHIFT_DATA_DIR} -Dbuild.version=$BUILD_NUMBER"

export JAVA_OPTS="-Xss128k"

# the -mem option will set -Xmx and -Xms to this number and PermGen to 2* this number

../../sbt/bin/sbt -mem 128 clean test

This combination seems to work quite nicely in the 512Mb OpenShift Small gear.
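To see why these numbers fit, it helps to run the arithmetic. The sketch below uses a commonly-cited rule of thumb (total is roughly max heap plus PermGen plus thread count times stack size); it is only an approximation, since the JVM also uses native memory that it ignores. The jvm_budget_mb function is hypothetical, and the thread count of 64 in the usage example is a guess rather than a measured figure.

```shell
#!/bin/sh
# Rough upper bound on JVM memory in MB, using the approximation
#   total = heap (-Xmx) + PermGen (-XX:MaxPermSize) + threads * stack (-Xss)
# Usage: jvm_budget_mb <heap_mb> <permgen_mb> <thread_count> <stack_kb>
jvm_budget_mb() {
  heap_mb="$1"
  permgen_mb="$2"
  threads="$3"
  stack_kb="$4"
  # Total stack space, rounded up to a whole MB
  stacks_mb=$(( (threads * stack_kb + 1023) / 1024 ))
  echo $(( heap_mb + permgen_mb + stacks_mb ))
}
```

With -mem 128 (so 128Mb of heap and 256Mb of PermGen), -Xss128k and an assumed 64 threads, jvm_budget_mb 128 256 64 128 prints 392, which leaves some headroom under the 512Mb limit for the slave channel JVM and everything else on the gear.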

Saturday, 1 November 2014

Walking away from Run@Cloud Part 3: Pause and Reflect

As CloudBees seem to have gone "Enterprise" in the worst possible way (from my perspective) and don't have any free offerings any more, I was now looking for:

- Git repository hosting (for private repos - my open-source stuff is on GitHub)

- A private Nexus instance to hold closed-source library artifacts

- A public Nexus instance to hold open-source artifacts for public consumption

- A "cloud" Jenkins instance to build both public- and private-repo-code when it changes:

  - pushing private webapps to Heroku

  - publishing private libs to the private Nexus

  - pushing open-source libs to the public Nexus

I did a load of Googling, and the result of this is an ecosystem that is far more "diverse" (a charitable way to say "dog's breakfast") but still satisfies all of the above criteria, and it's all free. More detail in blog posts to come, but here's what I've come up with: