The next phase of "greening" my home was monitoring the temperatures at various points in the house. After previous successful encounters with cheap Chinese WiFi power points, I was interested in seeing if I could perform similar OpenHAB-hacks on something a little more complex - the BroadLink A1 Air Quality sensor - obtained, as usual, from eBay at a very reasonable price.

These devices, like those before them, have dubious reputations for "phoning home" to random Chinese clouds and being difficult and unreliable to set up. I can confirm!

The first problem is easily nipped in the bud with some judicious network configuration, as I outlined last time. The device works just as well when isolated from the outside world, so there is nothing to fear there.

The second problem is real. Luckily, it's as if they know the default device-finding process will fail (which it did for me the half-dozen times I tried), and they actually support and document an alternative scheme ("AP Mode") which works just fine. Just one thing though - this device seems to have NO persisted storage of its network settings (!) which probably means you'll be going through the setup process a few times - you lose power, you lose the device. Oy.

So once I had the sensor working with its (actually quite decent) Android app, it was time to start protocol-sniffing, as there is no existing binding for this device in OpenHAB. It quickly became apparent that this would be a tough job. The app appeared to use multicast IP to address its devices, and a binary protocol over UDP for data exchange.

Luckily, after a bit more probing with Wireshark and PacketSender, the multicast element proved to be a non-event: it seems the app both broadcasts (i.e. to 255.255.255.255) and multicasts the same discovery packet. My tests showed no response to the multicast request on my network, so I ignored it.

Someone had done some hacks around the Android C library (linked from an online discussion about BroadLink devices), but all my packet captures showed that encryption was being employed (for reasons unknown), and inspection confirmed that the encryption is performed in a closed-source C library that I have no desire to drill into any further.

A shame. The BroadLink A1 sensor is a dead-end for me, because of their closed philosophy. I would have happily purchased a number of these devices if they used an open protocol, and would have published libraries and/or bindings for OpenHAB etc, which in turn encourages others to purchase this sort of device.

UPDATE - FEB 2018: The Broadlink proprietary encrypted communication protocol has been cracked! OpenHAB + Broadlink = viable!

Tuesday, 29 November 2016

Friday, 28 October 2016

Configuring Jacoco4sbt for a Play application

Despite (or perhaps due to) my recent dalliances with React.js, I'm still really loving the Play Framework, in both pure-backend- (JSON back-and-forth) and full-stack (serving HTML) modes. It's had a tremendous amount of thought put into it, it's been rock-solid in every situation (both work- and side-project) I've deployed it, it's well-documented and there's a solid ecosystem of supporting plugins, frameworks and libraries available.

One such plugin is Jacoco4sbt, which wires the JaCoCo code-coverage tool into SBT (the build system for Play apps). Configuration is pretty straightforward, and the generated HTML report is a nice way to target untested corners of your code. The only downside (which I've finally got around to looking at fixing) was that by default, a lot of framework-generated code is included in your coverage stats.

So without further ado, here's a stanza you can add to your Play app's build.sbt to whittle down your coverage report to code you actually wrote:

    jacoco.settings

    jacoco.excludes in jacoco.Config := Seq(
      "views*",
      "*Routes*",
      "controllers*routes*",
      "controllers*Reverse*",
      "controllers*javascript*",
      "controller*ref*",
      "assets*"
    )
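To get a feel for what those wildcards catch, here's a rough Python sketch. To be clear, this is not how Jacoco4sbt matches classes internally; it uses Python's `fnmatch` as a stand-in for the `*` wildcard, and the class names in `SAMPLE_CLASSES` are made-up examples of the sort of thing a Play build tends to generate.

```python
from fnmatch import fnmatchcase

# The exclusion patterns from the build.sbt stanza above.
EXCLUDES = [
    "views*",
    "*Routes*",
    "controllers*routes*",
    "controllers*Reverse*",
    "controllers*javascript*",
    "controller*ref*",
    "assets*",
]

# Hypothetical class names, for illustration only.
SAMPLE_CLASSES = [
    "views.html.index",
    "router.Routes",
    "controllers.routes",
    "controllers.ReverseApplication",
    "controllers.javascript.ReverseApplication",
    "controllers.Application",
    "models.User",
]


def is_excluded(class_name: str) -> bool:
    """True if any pattern matches (fnmatch approximates the '*' wildcard)."""
    return any(fnmatchcase(class_name, pattern) for pattern in EXCLUDES)


def covered_classes(names):
    """Return the names that would remain in the coverage report."""
    return [name for name in names if not is_excluded(name)]


if __name__ == "__main__":
    for name in SAMPLE_CLASSES:
        status = "excluded" if is_excluded(name) else "kept"
        print(f"{status:8} {name}")
```

With these sample names, only the hand-written controller and model survive the filter, which is the whole point of the exercise.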

I'll be putting this into all my Play projects from now on. Hope it helps someone.

Thursday, 29 September 2016

Push notifications and the endless quest for "rightness"

I recently was tasked with getting a push notification system up and running (e.g. the "unseen" badge count and messaging you see in lots of mobile apps). We had a fairly simple polling-based notification system that worked quite well but we really wanted that next level of connectedness that you get from instantaneous notifications, even when the app is "closed".

My attempt to get this working basically extended the existing system which used a count and some Booleans. After countless hours of trying to get this sync right, I came to a realization:

Trying to keep state by passing Booleans back and forth is like flinging paint at a wall and expecting the Mona Lisa to appear.

I had seenLatest, I had hasChanges and even resorted to forceUpdate. There was always a corner-case or timing/sequencing condition that would trip it up. And then I realized that I needed to embrace timing. Each syncable thing has a lastUpdated time and each client has a lastSaw time.

Key for getting this working was remembering to think about this from a user's point of view - they may well be logged in on three devices (aka clients) but once they have seen a notification on any device they don't want to see it again.

The pseudocode for this came down to:

Polling Loop / When a thing changes

    userLastSawTimestamp = max(clientLastSawTimestamps)
    if (thing.lastUpdated > userLastSawTimestamp) {
      showNotification(thing)
    }

On User Viewing Thing (i.e. clearing the badge)

    clientLastSawTimestamps[clientId] = now
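For the curious, the pseudocode above could be fleshed out along these lines in Python. This is only a minimal in-memory sketch, not the production code: `Thing`, `User`, `mark_seen` and `pending_notifications` are names invented for the example, and timestamps are passed in explicitly so the behaviour is easy to follow.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Thing:
    """A syncable thing; last_updated is seconds since the epoch."""
    name: str
    last_updated: float


@dataclass
class User:
    """Tracks, per client (device), when that client last viewed things."""
    client_last_saw: Dict[str, float] = field(default_factory=dict)

    def last_saw(self) -> float:
        # Seeing a notification on ANY device counts for the whole user,
        # so take the most recent timestamp across all clients.
        return max(self.client_last_saw.values(), default=0.0)

    def mark_seen(self, client_id: str, now: float) -> None:
        # Called when the user views the thing, i.e. clears the badge.
        self.client_last_saw[client_id] = now


def pending_notifications(user: User, things: List[Thing]) -> List[Thing]:
    """Things updated since the user last looked, on any client."""
    cutoff = user.last_saw()
    return [thing for thing in things if thing.last_updated > cutoff]


if __name__ == "__main__":
    user = User()
    things = [Thing("message", last_updated=100.0)]
    print([t.name for t in pending_notifications(user, things)])

    user.mark_seen("phone", now=150.0)
    print([t.name for t in pending_notifications(user, things)])
```

Note there isn't a single Boolean anywhere: whether something is "unseen" is derived from two timestamps each time, so there's no flag to get out of sync.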

And now it works how it should.

And I see failed attempts to do it correctly everywhere I look ... :-)

Thursday, 18 August 2016

Deep-diving into the Google pb= embedded map format

Building off the excellent work of Andrew Whitby, I wanted to go further in understanding this unusual format, specifically because when I tried to parse the lat-long of the marker out of it, the last couple of significant digits were always "off", and frustratingly, by a seemingly-random amount.

Let's take a look at a Google "Embed map" URL for a random lat-long. You can obtain one of these by clicking a random point on a Google Map, then clicking the lat-long hyperlink on the popup that appears at the bottom of the page. From there the map sidebar swings out; choose Share -> Embed map - that's your URL.

    "https://www.google.com/maps/embed?pb=!1m18!1m12!1m3!1d3152.8774048836685!2d145.01352231578036!3d-37.792912740624445!2m3!1f0!2f0!3f0!3m2!1i1024!2i768!4f13.1!3m3!1m2!1s0x0%3A0x0!2zMzfCsDQ3JzM0LjUiUyAxNDXCsDAwJzU2LjYiRQ!5e0!3m2!1sen!2sau!4v1471218824160"

Well, it's not pretty, but with the help of Andrew Whitby's cheat sheet and the comments from others, it turns out we can actually render it as a nested structure knowing that the format [id]m[n] means a structure (multi-field perhaps?) with n children in total - my IDE helped a lot here with indentation:

    "https://www.google.com/maps/embed?pb=" +
      "!1m18" +
        "!1m12" +
          "!1m3" +
            "!1d3152.8774048836685" +
            "!2d145.01352231578036" +
            "!3d-37.792912740624445" +
          "!2m3" +
            "!1f0" +
            "!2f0" +
            "!3f0" +
          "!3m2" +
            "!1i1024" +
            "!2i768" +
          "!4f13.1" +
        "!3m3" +
          "!1m2" +
            "!1s0x0%3A0x0" +
            "!2zMzfCsDQ3JzM0LjUiUyAxNDXCsDAwJzU2LjYiRQ" +
        "!5e0" +
      "!3m2" +
        "!1sen" +
        "!2sau" +
      "!4v1471218824160"

It all (kinda) makes sense! You can see how a decoder could quite easily be able to count ! characters to decide that a bang-group (or could we call it an m-group?) has finished. I'm going to take a stab and say the e represents an enumerated type too - given that !5e0 is "roadmap" (default) mode and !5e1 forces "satellite" mode.
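Here's a small Python sketch of such a decoder, built only on what's been worked out above: each `!`-separated token is an id, a one-letter type and a value, and an `m` token's number says how many of the following tokens (all descendants, not just direct children) belong to it. The function names and the tuple layout are my own invention, and the meaning of each type letter is left uninterpreted.

```python
import re

# The pb value from the example URL in this post.
PB = ("!1m18!1m12!1m3!1d3152.8774048836685!2d145.01352231578036"
      "!3d-37.792912740624445!2m3!1f0!2f0!3f0!3m2!1i1024!2i768!4f13.1"
      "!3m3!1m2!1s0x0%3A0x0!2zMzfCsDQ3JzM0LjUiUyAxNDXCsDAwJzU2LjYiRQ"
      "!5e0!3m2!1sen!2sau!4v1471218824160")

# A token looks like <id><type letter><value>, e.g. "1m18" or "2d145.01".
TOKEN = re.compile(r"^(\d+)([A-Za-z])(.*)$")


def parse_pb(pb: str) -> list:
    """Parse a pb string into nested (id, type, value-or-children) tuples."""
    tokens = [token for token in pb.split("!") if token]
    return _parse(tokens, 0, len(tokens))


def _parse(tokens, start, end):
    nodes = []
    i = start
    while i < end:
        match = TOKEN.match(tokens[i])
        if match is None:
            raise ValueError(f"unrecognised token: {tokens[i]!r}")
        field_id, kind, value = int(match.group(1)), match.group(2), match.group(3)
        i += 1
        if kind == "m":
            # The count covers every token nested below this one.
            count = int(value)
            if i + count > end:
                raise ValueError(f"group {field_id}m{count} overruns its parent")
            nodes.append((field_id, kind, _parse(tokens, i, i + count)))
            i += count
        else:
            nodes.append((field_id, kind, value))
    return nodes


def render(nodes, depth=0):
    """Return indented lines, similar to the hand-indented version above."""
    lines = []
    for field_id, kind, value in nodes:
        if kind == "m":
            lines.append("  " * depth + f"!{field_id}m")
            lines.extend(render(value, depth + 1))
        else:
            lines.append("  " * depth + f"!{field_id}{kind}{value}")
    return lines


if __name__ == "__main__":
    print("\n".join(render(parse_pb(PB))))
```

Running `parse_pb` over the example gives three top-level fields (the `!1m18` group, the `!3m2` language/region group and the `!4v` value), which matches the indentation I arrived at by hand.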

So this is all very well but it doesn't explain why URLs that I generate using the standard method don't actually put the lat-long I selected into the URL - yet they render perfectly! What do I mean? Well, the lat-long that I clicked on (i.e. the marker) for this example is actually:

-37.792916, 145.015722

And yet in the URL it appears (kinda) as:

-37.792912, 145.013522

Which is enough to be slightly, visibly, annoyingly, wrong if you're trying to use it as-is by parsing the URL. What I thought I needed to understand now was this section of the URL:

    "!1d3152.8774048836685" +
    "!2d145.01352231578036" +
    "!3d-37.792912740624445" +

Being the "scale" and centre points of the map. Then I realised - it's quite subtle, but for (one presumes) aesthetic appeal, Google doesn't put the map marker in the dead-centre of the map. So these co-ordinates are just the map centre. The marker itself is defined elsewhere. And there's only one place left. The mysterious z field:

    !2zMzfCsDQ3JzM0LjUiUyAxNDXCsDAwJzU2LjYiRQ

Sure enough, substituting the z-field from Mr. Whitby's example completely relocates the map to put the marker in the middle of Iowa. So now; how to decode this? Well, on a hunch I tried base64decode-ing it, and bingo:

    % echo MzfCsDQ3JzM0LjUiUyAxNDXCsDAwJzU2LjYiRQ | base64 --decode
    37°47'34.5"S 145°00'56.6"E

So there we have it. I can finally parse out the lat-long of the marker when given an embed URL. Hope it helps someone else out there...
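If you'd rather do this in code than on the command line, here's a minimal Python sketch. It makes a few assumptions that go beyond what I've verified: that the marker always lives in a `!2z` field, that the value is URL-safe base64 with its padding stripped, and that the decoded text is always latitude followed by longitude in degrees/minutes/seconds. The function names are made up for the example.

```python
import base64
import re

URL = ("https://www.google.com/maps/embed?pb=!1m18!1m12!1m3"
       "!1d3152.8774048836685!2d145.01352231578036!3d-37.792912740624445"
       "!2m3!1f0!2f0!3f0!3m2!1i1024!2i768!4f13.1!3m3!1m2!1s0x0%3A0x0"
       "!2zMzfCsDQ3JzM0LjUiUyAxNDXCsDAwJzU2LjYiRQ"
       "!5e0!3m2!1sen!2sau!4v1471218824160")

# Matches e.g. 37°47'34.5"S -> ("37", "47", "34.5", "S")
DMS = re.compile(r"""(\d+)°(\d+)'([\d.]+)"([NSEW])""")


def decode_z_field(z_value: str) -> str:
    """Base64-decode a z-field value, restoring any stripped '=' padding."""
    padded = z_value + "=" * (-len(z_value) % 4)
    return base64.urlsafe_b64decode(padded).decode("utf-8")


def dms_to_decimal(text: str):
    """Convert 'lat lon' in degrees/minutes/seconds to signed decimals."""
    parts = DMS.findall(text)
    if len(parts) != 2:
        raise ValueError(f"expected a lat/long pair, got: {text!r}")
    values = []
    for degrees, minutes, seconds, hemisphere in parts:
        value = int(degrees) + int(minutes) / 60 + float(seconds) / 3600
        # South and West are negative in decimal notation.
        values.append(-value if hemisphere in "SW" else value)
    return values[0], values[1]


def marker_from_embed_url(url: str):
    """Pull the marker's (lat, lon) out of an embed URL's z-field."""
    match = re.search(r"!2z([A-Za-z0-9_-]+)", url)
    if match is None:
        raise ValueError("no !2z field found in URL")
    return dms_to_decimal(decode_z_field(match.group(1)))


if __name__ == "__main__":
    lat, lon = marker_from_embed_url(URL)
    print(f"{lat:.6f}, {lon:.6f}")
```

One thing to keep in mind: the z-field only carries seconds to one decimal place, so the result agrees with the point I clicked to within about a millionth of a degree rather than exactly.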

Labels: google, googlemaps, hacking, maps, reverseengineering, web

Saturday, 30 July 2016

Vultr Jenkins Slave GO!

I was alerted to the existence of VULTR on Twitter - high-performance compute nodes at reasonable prices sounded like a winner for Jenkins build boxes. After the incredible flaming-hoop-jumping required to get OpenShift Jenkins slaves running (and able to complete builds without dying) it was a real pleasure to have the simplicity of root-access to a Debian (8.x/Jessie) box and far-higher limits on RAM.

I selected a "20Gb SSD / 1024Mb" instance located in "Silicon Valley" for my slave. Being on the opposite side of the US to my OpenShift boxes feels like a small, but important factor in preventing total catastrophe in the event of a datacenter outage.

Now grab the id_rsa.pub from your Jenkins master's .ssh directory and put it into /home/jenkins/.ssh/authorized_keys. In the Jenkins UI, set up a new set of credentials corresponding to this, using "use a file from the Jenkins master .ssh". (which by the way, on OpenShift will be located at /var/lib/openshift/{userid}/app-root/data/.ssh/jenkins_id_rsa).

I like to keep things organised, so I made a vultr.com "domain" container and then created the credentials inside.

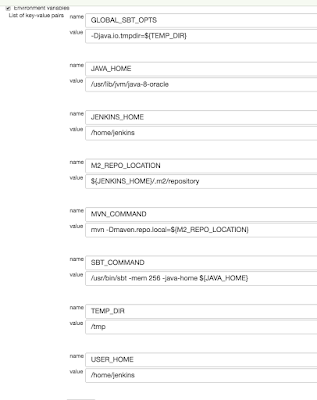

This machine is quite dramatically faster and has twice the RAM of my usual OpenShift nodes, making it extra-important to have those differences defined per-node instead of hard-coded into a job. One thing I was surprised to have to define was a memory limit for the JVM (via SBT's -mem argument) as I was getting "There is insufficient memory for the Java Runtime Environment to continue" errors when letting it choose its own upper limit. For posterity, here are the environment variables I have configured for my Vultr slave:

I selected a "20Gb SSD / 1024Mb" instance located in "Silicon Valley" for my slave. Being on the opposite side of the US to my OpenShift boxes feels like a small, but important factor in preventing total catastrophe in the event of a datacenter outage.

Setup Steps

(All these steps should be performed as root):User and access

Create a jenkins user:addgroup jenkins adduser jenkins --ingroup jenkins

Now grab the id_rsa.pub from your Jenkins master's .ssh directory and put it into /home/jenkins/.ssh/authorized_keys. In the Jenkins UI, set up a new set of credentials corresponding to this, using "use a file from the Jenkins master .ssh". (which by the way, on OpenShift will be located at /var/lib/openshift/{userid}/app-root/data/.ssh/jenkins_id_rsa).

I like to keep things organised, so I made a vultr.com "domain" container and then created the credentials inside.

Install Java

    echo "deb http://ppa.launchpad.net/webupd8team/java/ubuntu xenial main" | tee /etc/apt/sources.list.d/webupd8team-java.list
    echo "deb-src http://ppa.launchpad.net/webupd8team/java/ubuntu xenial main" | tee -a /etc/apt/sources.list.d/webupd8team-java.list
    apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys EEA14886
    apt-get update
    apt-get install oracle-java8-installer

Install SBT

    apt-get install apt-transport-https
    echo "deb https://dl.bintray.com/sbt/debian /" | tee -a /etc/apt/sources.list.d/sbt.list
    apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 642AC823
    apt-get update
    apt-get install sbt

More useful bits

Git

apt-get install git

NodeJS

    curl -sL https://deb.nodesource.com/setup_4.x | bash -
    apt-get install nodejs

This machine is quite dramatically faster and has twice the RAM of my usual OpenShift nodes, making it extra-important to have those differences defined per-node instead of hard-coded into a job. One thing I was surprised to have to define was a memory limit for the JVM (via SBT's -mem argument) as I was getting "There is insufficient memory for the Java Runtime Environment to continue" errors when letting it choose its own upper limit. For posterity, here are the environment variables I have configured for my Vultr slave:

Wednesday, 29 June 2016

ReactJS - Early Thoughts

It is very-much a recruiter's term but I, like many who started out with "back-end" skills, am endeavouring to be a proper Full Stack Developer.

I have always been comfortable with HTML, and first applied a CSS rule (inline) waaay back in the Netscape 3.0 days:

    <a href="..." style="text-decoration: none;">Link</a>

I'm pretty sure there were HTML frames involved there too. Good times. Anyway, the JavaScript world had always been a little too wild-and-crazy for me to get deeply into; browser support sucked, the "standard library" kinda sucked, and people had holy wars about how to structure even the simplest code.

Up to about 2015, I'd been happy enough with the basic tools - jQuery for DOM-smashing and AJAX, Underscore/Lodash for collection-manipulation, and Bootstrap's JS library for a little extra polish. The whole thing was based on full HTML being rendered from a traditional multi-tiered server, ideally written in Scala. I got a lot done this way.

I had a couple of brushes with Angular (1.x) along the way and didn't really see the point; the Angular code was always still layered on top of "perfectly-good" HTML from the server. It was certainly better-structured than the usual jQuery mess but the hundreds of extra kilobytes to be downloaded just didn't seem to justify themselves.

Now this year, I've been working with a Real™ Front End project - that is, one that stands alone and consumes JSON from a back-end. This is using Webpack, Babel, ES6, ReactJS and Redux as its principal technologies. After 6 weeks of working on this, here are some of my first thoughts:

- Good: ES6 goes a long way to making JavaScript feel grown-up

- Bad: The whole Webpack-Babel-bundling thing feels really rough - so much configuration, so hard to follow

- Good: React-Hot-Reloading is super, super-nice. Automatic browser reloads that keep state are truly magic

- Bad: You can still completely, silently toast a React app with a misplaced comma ...

- Good: ... but ESLint will probably tell you where you messed up

- Bad: It's still JavaScript, I miss strong typing ...

- Good: ... but React PropTypes can partly help to ensure arguments are there and (roughly) the right type

- Good: Redux is a really neat way of compartmentalising state and state transitions - super-important in front-ends

- Good: The React Way of flowing props down through components really helps with code structure

So yep, there were more Goods than Bads - I'm liking React, and I'm finally feeling like large apps can be built with JavaScript, get that complete separation between back- and front-ends, and be maintainable and practical for multiple people to work on concurrently. Stay tuned for more!

Thursday, 12 May 2016

Cloudy Continuous Integration Part 2 - Trigger-Happy

In Part 1 of this highly-sporadic series, I specified No Polling as a must-have for your build box. I have seen countless examples where otherwise-great toolchains are let down by dumb polling on behalf of the build server. Even worse is when a heap of jobs all go polling at the same time (e.g. a */5 * * * * cron expression or similar), resulting in terrible load spiking, and unfair (and possibly even wrong) build order.

Why do otherwise-excellent and smart engineers end up doing the kind of dumb polling in Jenkins that would keep them up at night if it was their code? Mainly because historically, it's been substantially harder to get properly-triggered job execution going in Jenkins. But things are getting better. The Jenkins GitHub Plugin does a terrific job of simplifying triggering, thanks to its convention-over-configuration approach - once you've nominated where your GitHub repo is, getting triggering is as simple as checking a box. Lovely.

Now, finally, it seems BitBucket have almost caught up in this regard. Naturally, as they offer (free) private repositories, there is a little bit more configuration required on the SCM side, but I can confirm that in May 2016, it works. There seem to have been a lot of changes going on under the hood at BitBucket, and the reliability of their triggering has suffered from week-to-week at times, but hopefully things will be solid now.

The 2016 Way to trigger Jenkins from BitBucket

- Firstly, there is now no need to configure a special user for triggering purposes

- Install the Jenkins BitBucket plugin. For your reference, I have 1.1.5

- In jobs that you want to be triggered, note there is a new "BitBucket" option under Build Triggers. You want this. If it was checked, uncheck the "polling" option and feel clean

- That's the Jenkins side done. Now flip to your BitBucket repo, and head to the Settings

- Under Integrations -> Webhooks add a new one, and fill it out something like this: Where jjj.rhcloud.com is your (in this case imaginary-OpenShift) Jenkins URL.

- Make sure you've included that trailing slash, and then you're done! Push some code to test

Hat-Tips to the following (but sadly outdated) bloggers
